“In practice, mathematicians prove theorems in a social context. It is a socially conditioned body of knowledge and techniques.”

William Thurston, a Fields Medalist of 1982 for his work on Haken manifolds, wrote a wonderful 1994 essay “On Proof and Progress in Mathematics”, describing his vision for how to do mathematics. Thurston advocated a free-form, intuitive style of mathematical discourse with less emphasis on conventional proofs, in part a reaction to the formal style in which he was trained, which emphasized rigorous proofs at the expense of exposition. Thurston is a gifted mathematician; his instincts about what is true in mathematics were often described as remarkable. “He kind of had a truth filter; …his mind rejected false mathematics,” recalled one of Thurston’s students. Thurston believed that mathematicians needed to improve their ability to communicate mathematical ideas rather than just the details of formal proofs. This human understanding was what gave mathematics not only its utility but its beauty.

In truth, the increasing complexity of modern mathematics today is already bumping up against the limits of human understanding. A case in point is Andrew Wile’s 109-page proof of Fermat’s Last Theorem, corrected and published in 1995 in the Annals of Mathematics after two years of intensive peer review by a small number of mathematicians who were capable of fully understanding at that time all the details of what Wile has done, 358 years after it was conjectured. Apparently Fermat had underestimated the length of this marvelous proof by a rather wide margin.

Practitioners are increasingly relying on computers to test conjectures, visualize mathematical objects and construct proofs. On August 10, 2014, Thomas Hales announced the completion of a computer-verified proof of the Kepler conjecture, a theorem dating back to 1611 that describes the most space-efficient way to pack spheres in a box (i.e., commonly known as the “fruit-packing problem”). What is perhaps unusual is that 15 years ago, Hales had already presented a computer-assisted proof that Kepler’s intuition was correct. Hales’ original proof in 1998 involves 40,000 lines of custom computer code and runs to 300 pages. It took 12 reviewers four years to check for errors. Even when the 121-page proof was finally published in the Annals of Mathematics in 2005, the reviewers could say only that they were “99 percent certain” the proof was correct; they were all too exhausted from checking the proof any further.

But not this time. Hales’ new proof was verified by a pair of “formal proof assistants” – software programs developed as part of the Flyspeck Project – named Isabelle and HOL Light. In effect, what Hales had done was to transform his earlier paper into a form that could be completely checked by machine – in a mere 156 hours of runtime. “This technology cuts the mathematical referees out of the verification process,” said Hales. “Their opinion about the correctness of the proof no longer matters.” Score one for the machines – in proof checking.

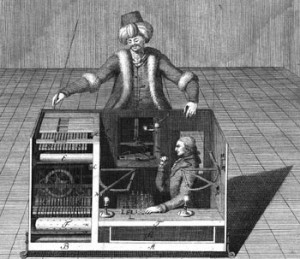

Not everyone wants to be a mathematician. Some might just want to play chess, for example – with a computer. Garry Kasparov came up with the idea of “collaborative chess” after he was defeated by Deep Blue in a 1997 rematch. In what is now called “freestyle” chess, humans are allowed unrestricted use of computer during tournaments. The idea is to create the highest level of chess ever played, a synthesis of the best of man and machine. Kasparov played the first public game of human-computer freestyle chess in June 1998 in León, Spain against Veselin Topalov, a top-rated grand master.

Each used a regular computer with off-the-shelf chess software and a historical database of hundreds of thousands of chess games, including some of the best ever played. The man-machine hybrid team is often called a “centaur,” after the mythical half-human, half-horse creature. Topalov fought Kasparov to a 3-3 draw, even though Kasparov was the stronger player and had trounced Topalov 4-0 a month before. The centaur play evened the odds. Kasparov's advantage in calculating tactics had been nullified by the machine.

“Human strategic guidance combined with the tactical acuity of a computer,” Kasparov concluded, “was overwhelming.” Having a computer partner meant never having to worry about making a tactical blunder. The humans could then concentrate on strategic planning instead of spending so much time on calculations. Under these conditions, human creativity was even more paramount. “In chess, as in so many things, what computers are good at is where humans are weak, and vice versa,” observed Kasparov. “Weak human + machine + better process was superior to a strong computer alone and, more remarkably, superior to a strong human + machine + inferior process.”

But machines are rapidly encroaching on skills that used to belong to humans alone. For example, as Tyler Cowen recently noted, the turning point in freestyle chess may be approaching as centaurs are starting to lose ground against the Bots. This phenomenon is both broad and deep, and has profound economic implications. It is very likely that progress in information technology will exceed our expectations and surprise us in unexpected ways. Are the days far off before machines would start building hypotheses all by themselves – unassisted by humans – which they in turn can proceed to test automatically? It makes us wonder: “what are humans still good for?”

References:

- Thurston, William (1994, April). On Proof and Progress in Mathematics. Bulletin of the American Mathematical Society, Vol. 30, No. 2, pp. 161-177. Retrieved from: http://www.ams.org/journals/bull/1994-30-02/S0273-0979-1994-00502-6/S0273-0979-1994-00502-6.pdf

- Hales, Thomas (2012). Dense Sphere Packings: A Blueprint for Formal Proofs. Cambridge University Press. Retrieved from: https://code.google.com/p/flyspeck/source/browse/trunk/kepler_tex/DenseSpherePackings.pdf

- Ellenberg, Jordan (2014, August 29). Will Machines Take Over Mathematics? Wall Street Journal. Retrieved from: http://online.wsj.com/articles/will-machines-put-mathematicians-out-of-work-1409336701

- McClain, Dylan Loeb (2005, June 21). In Chess, Masters Again Fight Machines. The New York Times. Retrieved from: http://www.nytimes.com/2005/06/21/arts/21mast.html

- Mueller, Tom (2005, December 12). Your Move. The New Yorker, pp. 62-69. Retrieved from: http://www.tommueller.co/s/New-Yorker-Your-Move.pdf

- Kasparov, Garry (2007). How Life Imitates Chess. Macmillan.

- Freeman, Richard B. (2014, May). Who Owns the Robots Rules the World. IZA World of Labor. Retrieved from: http://www.sole-jole.org/Freeman.pdf

- Thompson, Clive (2013). Smarter Than You Think: How Technology is Changing Our Minds for the Better. Penguin Press.

- Cowen, Tyler (2013). Average is Over: Powering America Beyond the Age of the Great Stagnation. Dutton Adult.

- Brynjolfsson, Erik and McAfee, Andrew (2012). Race against the Machine: How the Digital Revolution is Accelerating Innovation, Driving Productivity, and Irreversibly Transforming Employment and the Economy. Digital Frontier Press.